Architecture

Data Model

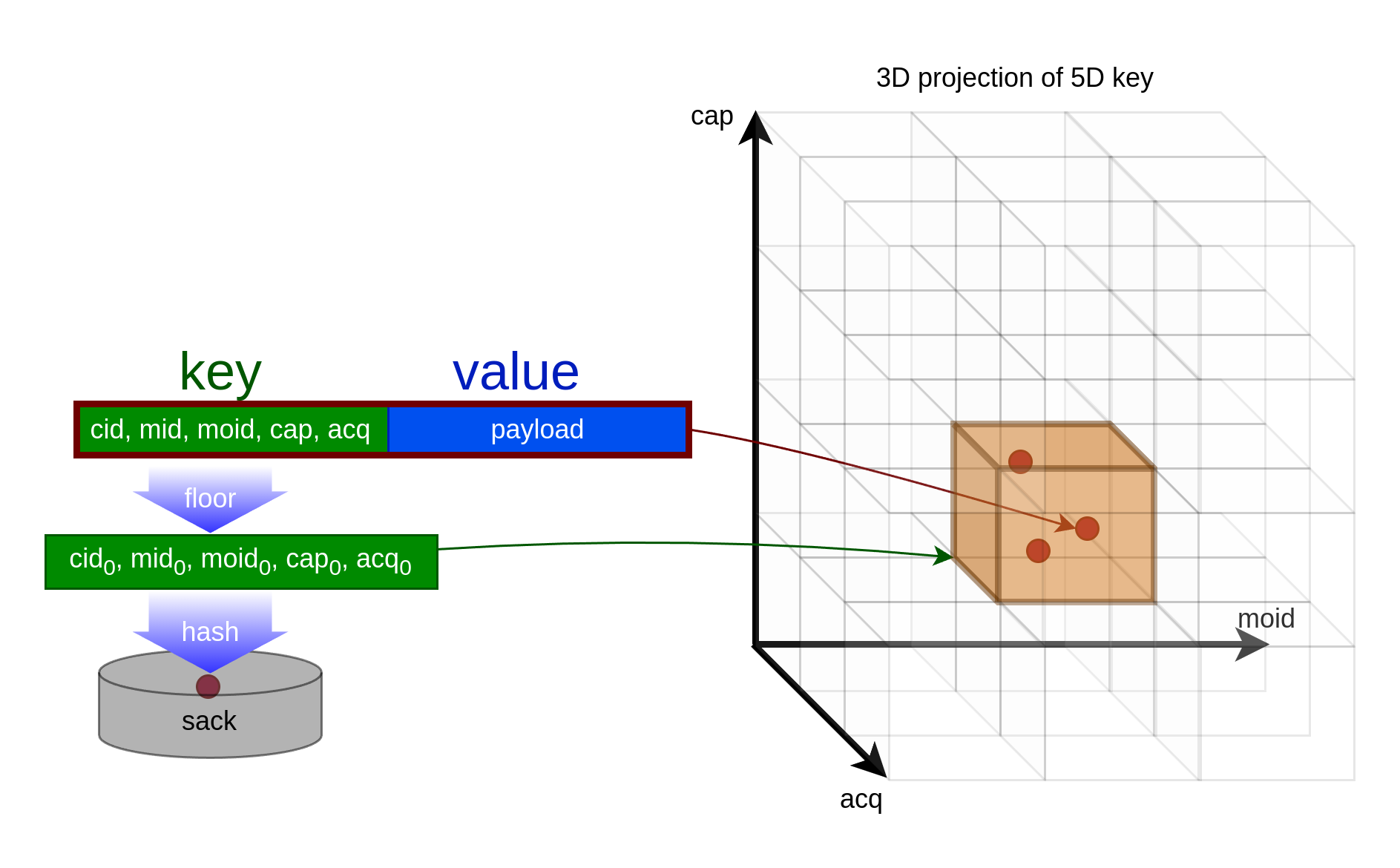

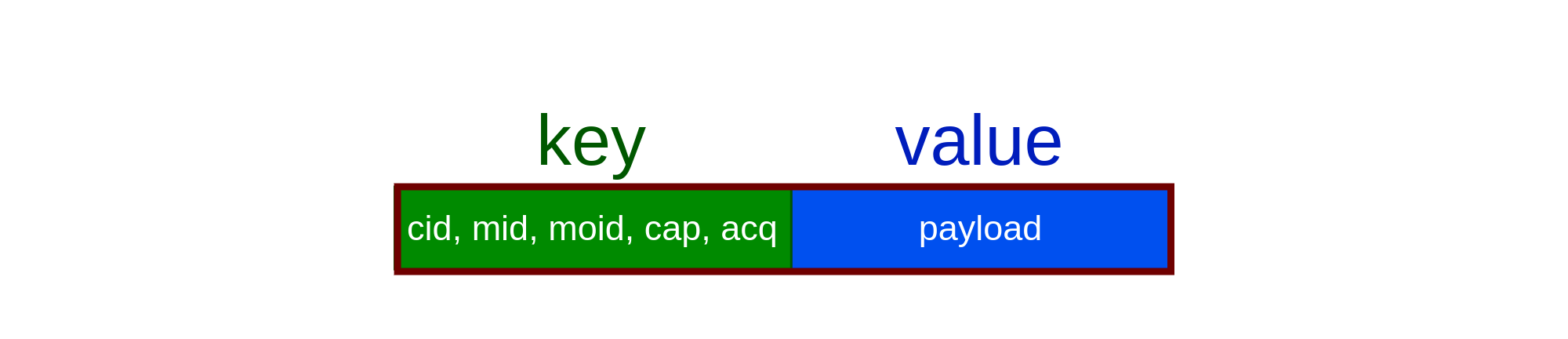

Each record in TStorage is a key-value pair, where the key is a 32-byte identifier. Each key is divided into 5 components that can be used for querying data.

TStorage is designed to store data that can be described as the result of a measurement, e.g., data acquired by an IoT device. The structure of the key is motivated by the observation that a measurement can be uniquely identified by 5 quantities:

cid - client id, identifier of the cluster user. It can be interpreted as the identifier of a group of IoT devices,

mid - meter id, identifier of the device that produced the measurement,

moid - meter object id, identifier of the measured quantity,

cap - capture time, the time (in nanoseconds) at which the measurement was captured by the measuring device,

acq - acquisition time, the time (in nanoseconds) at which the measurement was acquired by TStorage.

The tuple (cid, mid, moid, cap, acq) constitutes a key which, together with a value, represents a data record (see Figure 1). The value is meant to contain the result of a measurement (possibly a composite one, e.g., a matrix), but it can be anything, in any form (including compressed or encrypted). Even images and videos can be stored as data records. The only limit is the maximum record size of 32 MB.

Note that this interpretation only serves to motivate the five-component key. The components can be interpreted differently depending on the use case.

| Key component | Type | Description | Comments |
|---|---|---|---|
| cid | int32+ | client id | Non-negative 32-bit integer |
| mid | int64 | meter id | |
| moid | int32 | meter object id | |
| cap | int64 | capture time | By convention, this component should be interpreted as a timestamp in nanoseconds since 2001-01-01T00:00:00 UTC |
| acq | int64 | acquisition time | Generated automatically by TStorage (except with PUTA, where the client sets it); interpreted as a timestamp in nanoseconds since 2001-01-01T00:00:00 UTC |

Table 1. Description of key dimensions.
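The component types in Table 1 add up to 4 + 8 + 4 + 8 + 8 = 32 bytes, which matches the 32-byte key. The sketch below packs a key into 32 bytes and converts a datetime into the nanoseconds-since-2001 convention. It assumes a little-endian layout in the order (cid, mid, moid, cap, acq) with no padding; TStorage's actual wire or on-disk encoding is not described here, and `Key`, `KEY_FORMAT`, and `to_tstorage_ns` are hypothetical names.

```python
import struct
from dataclasses import dataclass
from datetime import datetime, timezone

# Epoch used by convention for cap and acq (Table 1).
TSTORAGE_EPOCH = datetime(2001, 1, 1, tzinfo=timezone.utc)

# Assumed layout (hypothetical): cid:int32, mid:int64, moid:int32, cap:int64, acq:int64,
# little-endian with no alignment padding ("<"), 32 bytes in total.
KEY_FORMAT = "<iqiqq"


@dataclass(frozen=True)
class Key:
    cid: int
    mid: int
    moid: int
    cap: int
    acq: int

    def pack(self) -> bytes:
        # cid is documented as a non-negative 32-bit integer.
        if self.cid < 0:
            raise ValueError("cid must be non-negative")
        return struct.pack(KEY_FORMAT, self.cid, self.mid, self.moid, self.cap, self.acq)

    @classmethod
    def unpack(cls, data: bytes) -> "Key":
        return cls(*struct.unpack(KEY_FORMAT, data))


def to_tstorage_ns(dt: datetime) -> int:
    """Nanoseconds from 2001-01-01T00:00:00 UTC to a timezone-aware datetime."""
    delta = dt - TSTORAGE_EPOCH
    # Integer arithmetic avoids the float rounding of timedelta.total_seconds().
    return (delta.days * 86_400 + delta.seconds) * 1_000_000_000 + delta.microseconds * 1_000
```

For example, a measurement captured one second after the epoch gets cap = 1 000 000 000, and its packed key is exactly 32 bytes long.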

Writing data

The write operation, referred to below as a PUT request, saves a list of records in TStorage, each identified by its (cid, mid, moid, cap) key components. The last key component, acq, is generated automatically by TStorage during the PUT to guarantee the consistency of read operations. TStorage's consistency guarantees are described in the Data Consistency section.

TStorage keeps track of all versions of records. A new record with the same (cid, mid, moid, cap) key components as a record already persisted in TStorage is written with a larger acq timestamp and can be interpreted as a new version of that record.
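Because every version is kept, a reader that only needs the newest value can keep, for each (cid, mid, moid, cap), the record with the largest acq. A minimal sketch, assuming records arrive as hypothetical `((cid, mid, moid, cap, acq), value)` pairs; `latest_versions` is an illustrative helper, not part of TStorage's interface.

```python
from typing import Dict, Iterable, Tuple

# Hypothetical record representation: ((cid, mid, moid, cap, acq), value).
Record = Tuple[Tuple[int, int, int, int, int], bytes]


def latest_versions(records: Iterable[Record]) -> Dict[Tuple[int, ...], Record]:
    """Keep, for every (cid, mid, moid, cap), the record version with the largest acq."""
    latest: Dict[Tuple[int, ...], Record] = {}
    for key, value in records:
        ident = key[:4]  # (cid, mid, moid, cap) identifies the logical record
        current = latest.get(ident)
        if current is None or key[4] > current[0][4]:
            latest[ident] = (key, value)
    return latest
```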

Atomicity of a PUT request is guaranteed only at the record level, i.e., each record is either written entirely to persistent storage or discarded. Consequently, a failed PUT request containing more than one record may have been partially written to storage.

Within a single PUT request, all records must have unique (cid, mid, moid, cap) keys. Putting duplicates in a single PUT request is illegal and may result in undefined behaviour.
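Since duplicates within one PUT request lead to undefined behaviour, a client may want to reject such a batch before sending it. A sketch, assuming each record to be written is a hypothetical `((cid, mid, moid, cap), value)` pair (acq is omitted because TStorage assigns it during PUT); `duplicate_keys` and `check_put_batch` are illustrative names.

```python
from collections import Counter
from typing import List, Sequence, Tuple

# Hypothetical PUT input: ((cid, mid, moid, cap), value); acq is assigned by TStorage.
PutRecord = Tuple[Tuple[int, int, int, int], bytes]


def duplicate_keys(records: Sequence[PutRecord]) -> List[Tuple[int, int, int, int]]:
    """Return every (cid, mid, moid, cap) key that occurs more than once in the batch."""
    counts = Counter(key for key, _ in records)
    return [key for key, n in counts.items() if n > 1]


def check_put_batch(records: Sequence[PutRecord]) -> None:
    """Raise before sending a batch that would be illegal as a single PUT request."""
    dups = duplicate_keys(records)
    if dups:
        raise ValueError(f"duplicate (cid, mid, moid, cap) keys in one PUT: {dups}")
```

If two values for the same key are genuinely needed, they can go into separate PUT requests; the later one is then stored as a newer version with a larger acq.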

NOTE: Some use cases, especially data backup, require writing data to TStorage with the acq key component set explicitly. TStorage supports this with a special method, PUTA, but the user must be aware that in this case there are no consistency guarantees between read and write requests.

Reading data

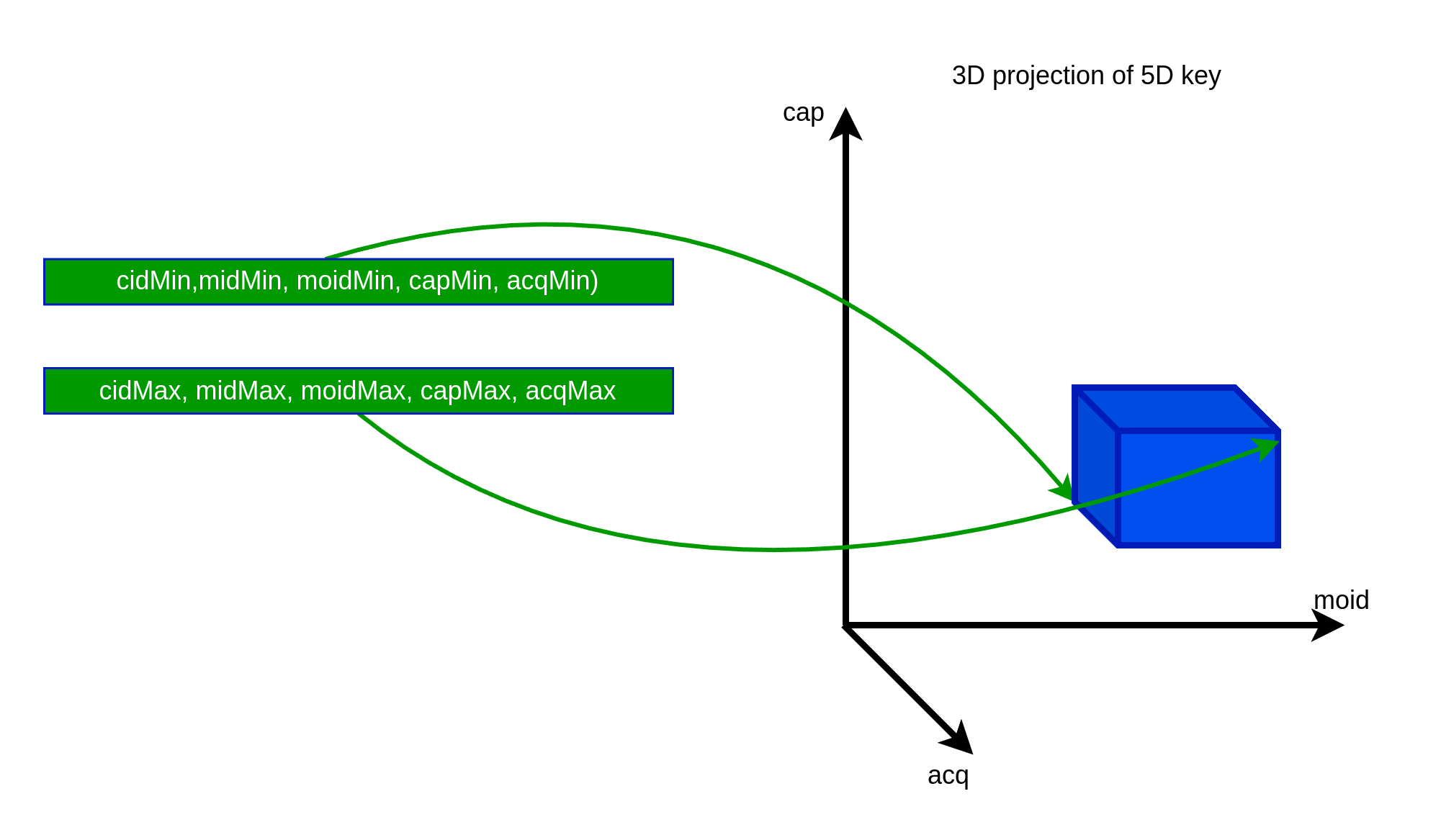

TStorage read operations, referred to below as GET requests, are implemented as range queries. To read data from TStorage, the user defines a subset of keys in the five-dimensional key space by specifying minimum and maximum values in each dimension of the (cid, mid, moid, cap, acq) key tuple (see Figure 2). This can be interpreted as defining a 5D cuboid by two vertices, keyMin and keyMax, where every component of keyMin is smaller than the matching component of keyMax.

GET(keyMin, keyMax) returns all records whose key components lie between keyMin (inclusive) and keyMax (exclusive), i.e.,

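$$
\begin{aligned}
\text{keyMin.cid} &\le \text{key.cid} < \text{keyMax.cid}\\
\text{keyMin.mid} &\le \text{key.mid} < \text{keyMax.mid}\\
\text{keyMin.moid} &\le \text{key.moid} < \text{keyMax.moid}\\
\text{keyMin.cap} &\le \text{key.cap} < \text{keyMax.cap}\\
\text{keyMin.acq} &\le \text{key.acq} < \text{keyMax.acq}
\end{aligned}
$$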
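The containment condition for GET translates directly into code: each of the five components must satisfy min ≤ value < max. A sketch with keys as plain 5-tuples (cid, mid, moid, cap, acq); `in_range` and `get_range` are illustrative names, and the linear scan only demonstrates the semantics, not how TStorage evaluates queries.

```python
from typing import Iterable, List, Tuple

Key5 = Tuple[int, int, int, int, int]  # (cid, mid, moid, cap, acq)


def in_range(key: Key5, key_min: Key5, key_max: Key5) -> bool:
    """True if key_min[i] <= key[i] < key_max[i] holds for all five components."""
    return all(lo <= k < hi for k, lo, hi in zip(key, key_min, key_max))


def get_range(keys: Iterable[Key5], key_min: Key5, key_max: Key5) -> List[Key5]:
    """Naive linear-scan illustration of GET(keyMin, keyMax) semantics."""
    return [k for k in keys if in_range(k, key_min, key_max)]
```

Because the upper bound is exclusive, selecting exactly one meter, e.g. mid = 42, means passing keyMin.mid = 42 and keyMax.mid = 43.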

There is a caveat to this rule when keyMax.acq is set near the current time or in the future; it is explained in the Data Consistency section.

Data Consistency

NOTE: The consistency guarantees described here hold only when no PUTA requests are performed.

NOTE: Data consistency will be broken by the data retention mechanism (explained in the Administration section).

TStorage provides consistency guarantees for GET and PUT requests. A GET request may read from the same key range that a PUT request is writing to, and in general it is not obvious what result the user should expect from such a GET. To facilitate correct data processing in this case, TStorage provides the following consistency guarantees:

Each successful GET request returns acq0, the last acq value (data version) up to which TStorage guarantees that all data in the given key range are persisted and that no new data will be persisted in that range in the future. New data for that range will be stamped with an acq higher than acq0. Records returned by the GET request are limited to the key range with its upper acq bound (keyMax.acq) "trimmed" to acq0.

Every subsequent GET request for the same range, with keyMax.acq trimmed to acq0, returns the same result.

For two GET requests performed one after another, if the key range of the second is a subset of the key range of the first, the second GET returns all records that the first GET returned within that range.

Note that the above statements apply only under normal system operation.
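One way to read these guarantees from a client's perspective: the range a GET actually covers is the requested range with keyMax.acq replaced by min(keyMax.acq, acq0). Since data in that range below acq0 will not change and new data receive an acq higher than acq0, a follow-up GET could start at keyMin.acq = acq0 to pick up only newer records. This sketch assumes the trimmed bound stays exclusive, like the other GET bounds; the function names are hypothetical.

```python
from typing import NamedTuple, Tuple


class Key(NamedTuple):
    cid: int
    mid: int
    moid: int
    cap: int
    acq: int


def trimmed_range(key_min: Key, key_max: Key, acq0: int) -> Tuple[Key, Key]:
    """Key range a GET actually covers once keyMax.acq is trimmed to acq0."""
    return key_min, key_max._replace(acq=min(key_max.acq, acq0))


def next_incremental_range(key_min: Key, key_max: Key, acq0: int) -> Tuple[Key, Key]:
    """Range for a follow-up GET that skips records already returned.

    Records below acq0 in this range are final and new records get an acq higher
    than acq0, so starting the next query at acq0 neither misses nor repeats data
    (assuming the exclusive upper bound used by GET).
    """
    return key_min._replace(acq=acq0), key_max
```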

TStorage interface

TStorage has the following methods to read and write data:

GET(keyMin, keyMax) - reads records from the five-dimensional key range given by the (keyMin, keyMax) tuple.

GET_ACQ(keyMin, keyMax) - returns acq0, the trimmed acq value, for the key range given by the (keyMin, keyMax) tuple.

PUT(records) - writes records to TStorage. TStorage assigns the acq key component to each record.

PUTA(records) - writes records to TStorage. The client is responsible for assigning the acq key component to each record.
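To make the contract of these four methods concrete, here is a toy, single-process, in-memory model. It is not TStorage's implementation: a plain counter stands in for nanosecond acquisition timestamps, and acq0 is global rather than per key range. GET_ACQ reserves one counter value, so every record already written has acq < acq0 and every later PUT receives acq > acq0, mirroring the Data Consistency guarantees. In this model PUTA bypasses the counter, which shows why it voids those guarantees. `ToyTStorage` and its method names are hypothetical.

```python
import itertools
from typing import Dict, List, Sequence, Tuple

Key4 = Tuple[int, int, int, int]       # (cid, mid, moid, cap)
Key5 = Tuple[int, int, int, int, int]  # (cid, mid, moid, cap, acq)


class ToyTStorage:
    """Hypothetical in-memory model of GET / GET_ACQ / PUT / PUTA semantics."""

    def __init__(self) -> None:
        self._records: Dict[Key5, bytes] = {}
        self._clock = itertools.count(1)  # stand-in for acquisition timestamps

    def put(self, records: Sequence[Tuple[Key4, bytes]]) -> List[Key5]:
        """PUT: the store assigns acq; reusing a 4-part key creates a newer version."""
        keys = [key for key, _ in records]
        # Real TStorage leaves duplicates undefined; the toy simply rejects them.
        if len(set(keys)) != len(keys):
            raise ValueError("duplicate (cid, mid, moid, cap) keys in one PUT")
        written = []
        for key, value in records:
            full_key = key + (next(self._clock),)
            self._records[full_key] = value
            written.append(full_key)
        return written

    def puta(self, records: Sequence[Tuple[Key5, bytes]]) -> None:
        """PUTA: the caller supplies acq, so the consistency guarantees do not apply."""
        for key, value in records:
            self._records[key] = value

    def get_acq(self, key_min: Key5, key_max: Key5) -> int:
        """GET_ACQ: reserve a clock value; earlier writes are below it, later ones above."""
        # The toy ignores the key range and uses one global acq0.
        return next(self._clock)

    def get(self, key_min: Key5, key_max: Key5) -> Tuple[List[Tuple[Key5, bytes]], int]:
        """GET: records in [key_min, key_max) with keyMax.acq trimmed to acq0."""
        acq0 = self.get_acq(key_min, key_max)
        upper = key_max[:4] + (min(key_max[4], acq0),)
        hits = [
            (k, v) for k, v in self._records.items()
            if all(lo <= c < hi for c, lo, hi in zip(k, key_min, upper))
        ]
        return sorted(hits), acq0
```

Repeating a GET with keyMax.acq set to the returned acq0 yields the same records even after further PUTs, which is the second guarantee in the Data Consistency section.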

System Design

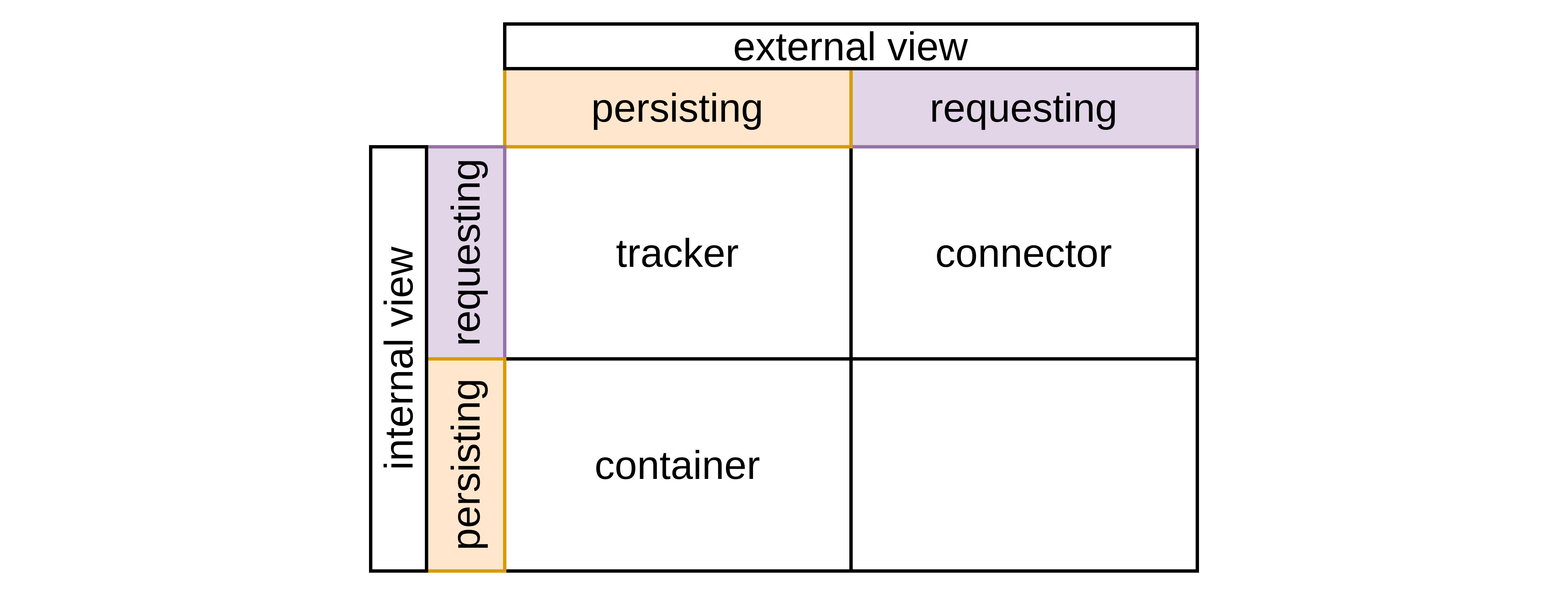

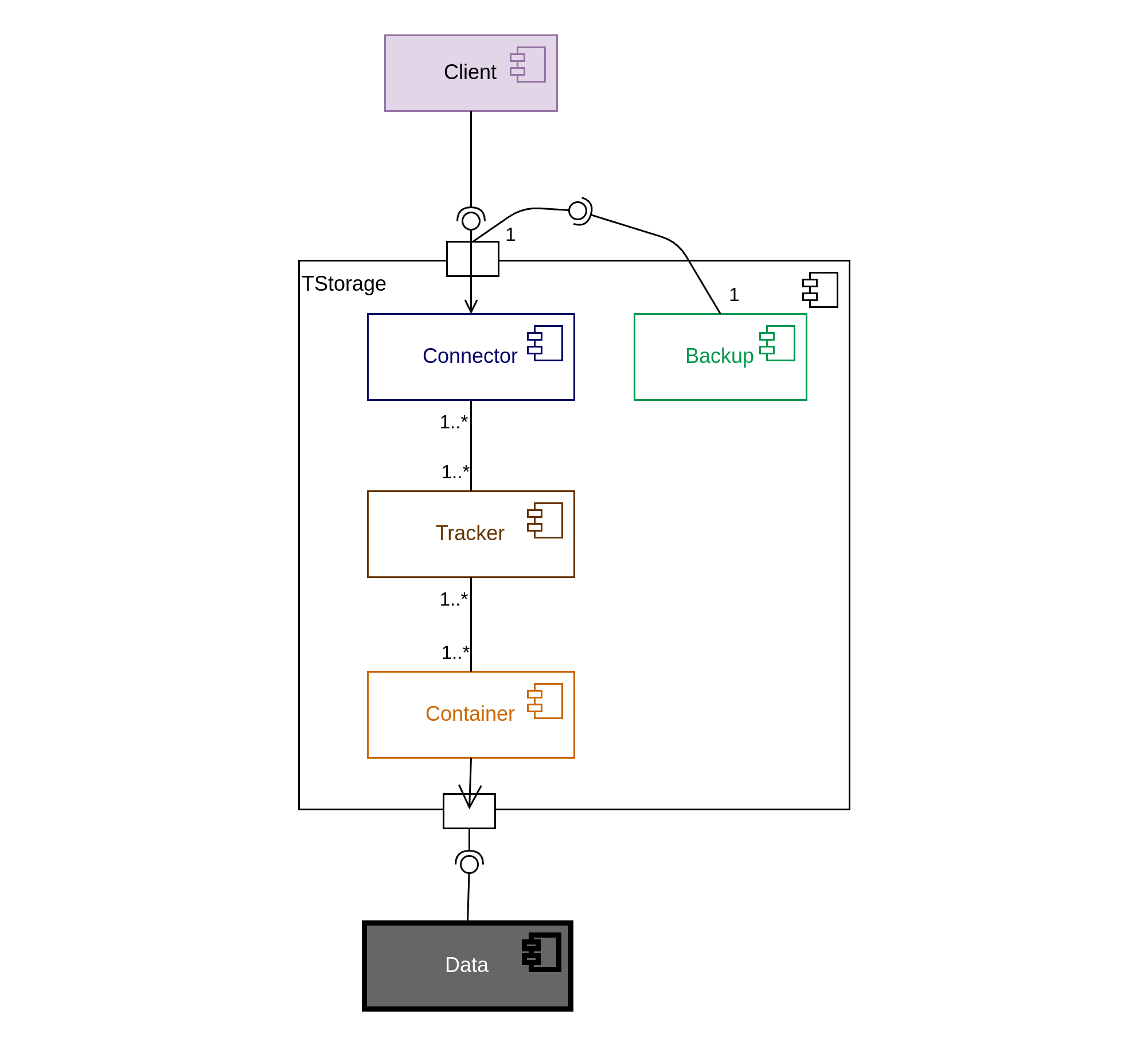

TStorage consists of four main components plus a set of tools:

Connector - serves as the interface between an external user and the rest of the system.

Tracker - implements the declared data consistency guarantees.

Container - responsible for persisting data and managing communication with the file system.

DTimestamp - provides the timestamps used for data versioning.

Request processing

Each request is processed by three components: the connector, the tracker, and the container. The connector serves as the interface between an external user and the rest of the system; it accepts two basic types of requests, GET and PUT. Conceptually, the connector reads and writes data from and to a subset of virtual disks (referred to below as msacks), which are governed by trackers. Acting as intermediaries in fetching and persisting data, trackers implement the declared consistency level. From the containers' perspective, trackers act as clients. These dependencies between components are summarized in Figure 3. Containers, in turn, govern real disks (referred to below as sacks), as shown in Figure 4. These components also expose an interface for maintenance tasks such as an instant log flush to disk and blocking or unblocking partition deletion. Additionally, the component depicted as Backup in Figure 4 allows offline data synchronization between different system instances; it is meant to transfer data between data centers on a scheduled basis.

Stored data are organized by key ranges (see Figure 5). In addition to the data records themselves, TStorage persists information about where data occur. This information takes the form of key ranges, as shown in Figure 6.

These ranges mark where data can be expected to be found. Both data records and ranges are stored in sacks, which abstract real disks.

The space of keys is divided into equally sized cuboids:

5-dimensional (cid, mid, moid, cap, acq) for data records and ranges stored on sacks. Cuboids used to group ranges are larger than those used for data records.

4-dimensional (cid, mid, moid, cap) for msacks.

Once the data (data records or ranges) are assigned to a cuboid, the cuboid itself is assigned to the corresponding sack or msack via a hash function.
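The two-step placement can be sketched as follows: map a key to the integer coordinates of its cuboid by floor-dividing each component by the cuboid edge length, then hash those coordinates to pick a sack (5-D) or an msack (4-D, acq dropped). The edge lengths, bucket counts, and the choice of BLAKE2b as the hash are illustrative assumptions; TStorage's actual cuboid sizes and hash function are not described here, and all names are hypothetical.

```python
import hashlib
import struct
from typing import Sequence, Tuple


def cuboid_of(key: Sequence[int], edges: Sequence[int]) -> Tuple[int, ...]:
    """Integer coordinates of the equally sized cuboid containing `key`."""
    # Floor division keeps cuboids equally sized for negative components too.
    return tuple(k // e for k, e in zip(key, edges))


def bucket_for(cuboid: Tuple[int, ...], n_buckets: int) -> int:
    """Map a cuboid to one of n_buckets sacks or msacks with a stable hash."""
    raw = struct.pack(f"<{len(cuboid)}q", *cuboid)
    digest = hashlib.blake2b(raw, digest_size=8).digest()
    return int.from_bytes(digest, "little") % n_buckets


# Illustrative edge lengths (hypothetical): one hour of cap/acq, 1000 meters per cuboid.
HOUR_NS = 3_600 * 10**9
RECORD_EDGES = (1, 1_000, 1, HOUR_NS, HOUR_NS)  # 5-D cuboids for data records on sacks
MSACK_EDGES = (1, 1_000, 1, HOUR_NS)            # 4-D cuboids for msacks


def place(key: Tuple[int, int, int, int, int], n_sacks: int, n_msacks: int) -> Tuple[int, int]:
    """Return (sack for the data record, msack) for a full 5-part key."""
    sack = bucket_for(cuboid_of(key, RECORD_EDGES), n_sacks)
    msack = bucket_for(cuboid_of(key[:4], MSACK_EDGES), n_msacks)
    return sack, msack
```

Ranges would be placed the same way, only with larger edge lengths. Hashing whole cuboids rather than individual keys keeps neighbouring keys together on one sack, so a range query touches a limited number of sacks.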